There are a handful of semi-universal truths about LLMs that, once internalised, will significantly reduce your log error; some of them remain outside of the reach of even some of the field’s luminaries.

You should be fine, however: while you might not have their experience or accolades, you also lack their baggage of favourite frameworks months behind in rent or late-apocalyptic cognitive dissonance¹.

I’ll structure this series a bit like a paper club: each instalment will analyse a recent paper, and build the groundwork for the aforementioned truths one solid, uncontroversial rebar at a time.

The Cursed Reversal

"Reversal Curse", or “Tom Cruise mommy issue” (after the most cited example) is a term purporting to describe a situation where large language models can't generalise a learned relationship from one direction to its logical reverse. Put simply, if a model learns that "A is B," it often struggles to infer "B is A."

For instance, an LLM trained on "Olaf Scholz was the ninth Chancellor of Germany" might fail when asked "Who was the ninth Chancellor of Germany?", and this limitation is presented as a failure of the model's basic logic skills. Only half true: it is someone’s failure of deduction, no doubt about that.

The paper² made quite a splash, claiming to “expose a surprising failure of generalisation”.

In the intervening time, this alleged discovery has been touted as definitive proof of LLMs having “hit the wall” throughout the full spectrum of the AI opinion-haver community: both Emily Bender and Gary Marcus, for instance, had the dubious honour of being deterministically parroted in thinkboi Alex Hern’s fancy livejournal.

Human, although Humean

Far from being surprising or unique to LLMs, this phenomenon is well-documented among scholars of human cognition (or should be). Both humans and LLMs naturally encode information asymmetrically based on frequency, context, and what stands out. Let's look at some everyday examples³:

Asking "What is the capital of Burkina Faso?" might stump you, while "Ouagadougou is the capital of which country?" is usually easier for those familiar with both.

You might struggle to name the capital of Côte d'Ivoire, but if someone mentions Yamoussoukro, you are more likely to identify its country.

And, for my final trick: Australia.

These examples reveal a basic cognitive principle: each conceptual association has both inbound and outbound connections, and these rarely have the same weight. This asymmetry in how we remember things—known in psychology as associative recall asymmetry—is well-documented and perfectly normal.
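To make the inbound/outbound idea concrete, here is a minimal sketch of a directed association graph in which each direction of a link carries its own weight. The node names, the weights, and the `recall_probability` helper are all invented for illustration; nothing here is measured from people or from a model.

```python
# Hypothetical association strengths, invented for illustration only.
# Each directed edge (cue -> target) carries its own weight.
ASSOCIATIONS = {
    "Burkina Faso": {"Ouagadougou": 2, "West Africa": 5, "Sahel": 3},
    "Ouagadougou": {"Burkina Faso": 9, "memorable place names": 1},
}


def recall_probability(cue: str, target: str) -> float:
    """Share of the cue's outbound weight that flows to the target."""
    outbound = ASSOCIATIONS.get(cue, {})
    total = sum(outbound.values())
    return outbound.get(target, 0) / total if total else 0.0


forward = recall_probability("Burkina Faso", "Ouagadougou")
backward = recall_probability("Ouagadougou", "Burkina Faso")
print(f"country -> capital: {forward:.2f}")
print(f"capital -> country: {backward:.2f}")
```

With these made-up numbers the country has many competing outbound links, so the capital gets a small share, while the capital points almost exclusively back at the country. The same pair of nodes yields two very different recall odds depending on which one is the cue.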

Still, to understand the mechanism through which this happens—and perhaps figure out whether it happens for humans and LLMs through similar mechanics—let’s go through an example in detail.

The Network’s Indra

Quick! What’s the capital of Mongolia? If you had to peek forward to this sentence to remember it’s Ulan Bator, but then felt an immediate jolt of familiarity, savour those feelings and try to verbalise the almost instantaneous proto-thoughts that darted around your cranium; it will help make the process described below less abstract.

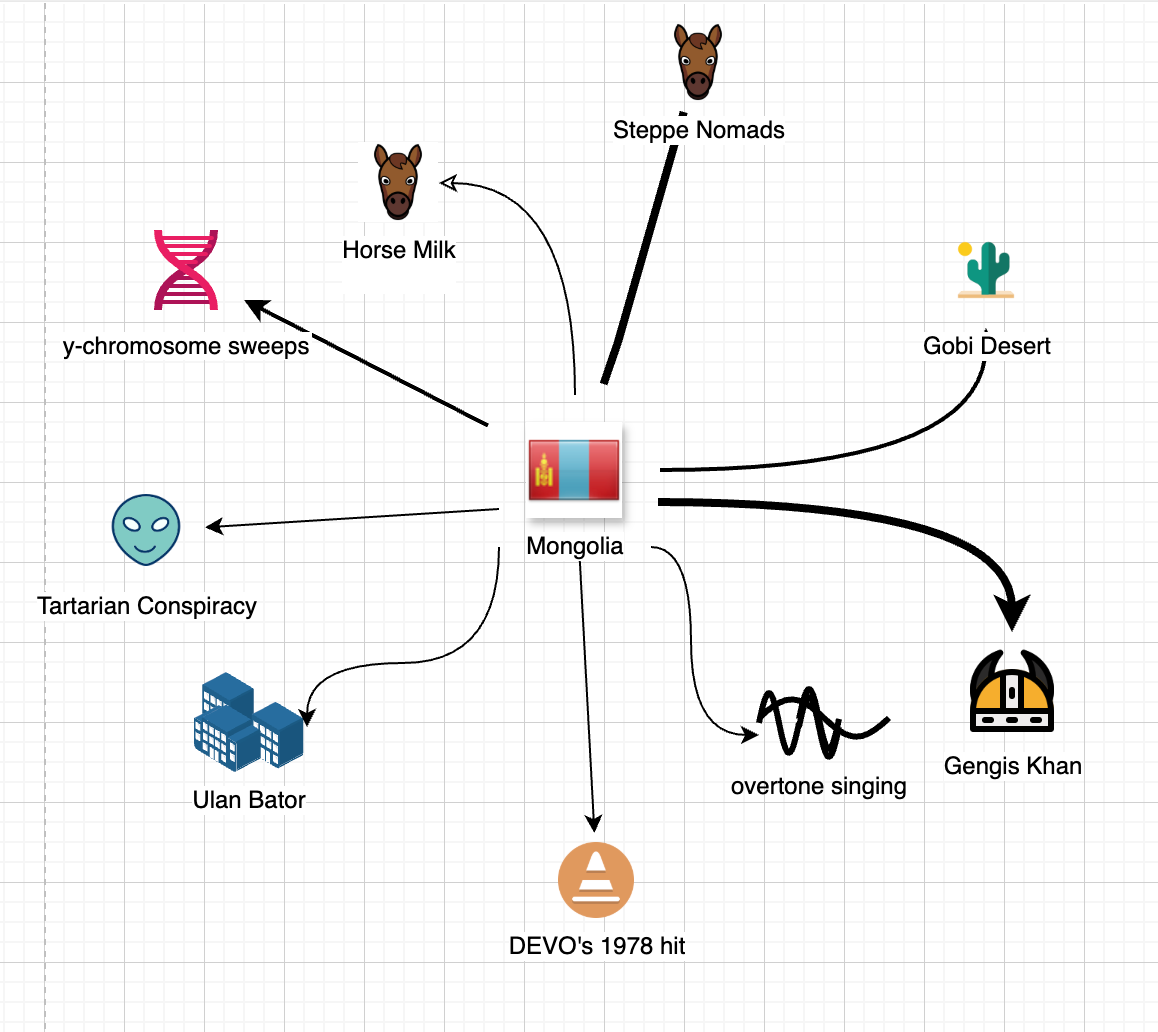

Also, try to remember which sorts of associations popped into your mind for each, and to enumerate which ones were the strongest. For me, it was something like the graph below, with thicker arrows indicating stronger associations.

It is, once again, about flows through graphs.

Now, let’s try Ulan Bator. I am ashamed to admit that, without deliberately racking my brain, all I can come up with is this: all other connections go through Mongolia.

Instants later, I realised the buildings I had seen flashing before my mind’s eye were actually Angkor Wat, in modern Cambodia.

This should provide enough of an intuitive grounding for the enterprising reader to figure out how larger, loop-rich conceptual networks⁴ influence saliency; adding context and attention to the mix should then be fairly trivial (hint: what other nodes with outbound connections to Ulan Bator activate when the assistant is prompted with a question about the country it is in?).
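For readers who prefer to poke at the hint rather than introspect, here is a rough spreading-activation sketch. Everything in it is an assumption made for illustration: the `EDGES` table, its weights, the "capital city" context node, and the `spread` function with its `steps` and `decay` parameters. It is a toy, not a claim about how a transformer or a brain actually computes.

```python
# Hypothetical weighted edges (source -> target); all numbers are made up.
EDGES = {
    "Mongolia": {"Genghis Khan": 5, "steppe": 4, "yurts": 3, "Ulan Bator": 1},
    "Ulan Bator": {"Mongolia": 9},
    "Genghis Khan": {"Mongolia": 6, "steppe": 2},
    "steppe": {"Mongolia": 3, "yurts": 2},
    "yurts": {"Mongolia": 2, "steppe": 2},
    # A context node: the question itself mentions capitals.
    "capital city": {"Ulan Bator": 2, "Ouagadougou": 2, "Canberra": 2},
}


def spread(cues, steps=2, decay=0.5):
    """Push activation along outbound edges for a fixed number of steps."""
    activation = {cue: 1.0 for cue in cues}
    frontier = dict(activation)
    for _ in range(steps):
        next_frontier = {}
        for node, energy in frontier.items():
            outbound = EDGES.get(node, {})
            total = sum(outbound.values())
            for target, weight in outbound.items():
                # Each target receives its share of the decayed energy.
                share = decay * energy * weight / total
                next_frontier[target] = next_frontier.get(target, 0.0) + share
        for node, energy in next_frontier.items():
            activation[node] = activation.get(node, 0.0) + energy
        frontier = next_frontier
    return activation


bare = spread(["Mongolia"])
with_context = spread(["Mongolia", "capital city"])
print(f"Ulan Bator, cue alone:    {bare['Ulan Bator']:.3f}")
print(f"Ulan Bator, with context: {with_context['Ulan Bator']:.3f}")
```

In this toy graph the weak Mongolia -> Ulan Bator edge barely lights the capital up on its own, but a second cue with its own outbound edge to the same node adds to the total. That is the sense in which context changes what gets recalled without any edge weight changing.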

Reversing the curse

The fundamental issue underlying the so-called "reversal curse" in LLM research isn’t about models failing logical reasoning or generalisation; it’s a failure on the researchers' part to approach these models as they are: fluid analogy machines. This remains hard to accept for a culture where no one really got over the fact that lowly, messy neural networks can think, and which still feels like AI should be the sort of symbol-shunting, optimally-decision-theoretic übersperg whose inner workings you can behold given enough familiarity with formal systems.

AI alarmists—who miss fearing AI as game-theoretically optimal future agents—and Chomskyite AI skeptics—who dismiss them for not fitting neatly into symbolic logic—share this conceptual error. They ignore that intelligence, both human and artificial, operates not through neat syllogisms but through fuzzy conceptual networks that naturally produce asymmetrical recall patterns:

Formal logic always runs in emulation

Finally, hot tip: if you are planning to present a phenomenon as the stumbling block for LLMs to reason at human level, it pays to make sure humans do not present the same behaviour.

Notes

late-apocalyptic cognitive dissonance

You might be interested in this one book, an ethnography of a UFO rapture + apocalypse cult in the period straddling the date on which the rapture was slated to happen. It’s the research from which the very term “cognitive dissonance” comes.

the paper

LLMs trained on "A is B" fail to learn "B is A"

Lukas Berglund, Meg Tong, Max Kaufmann, Mikita Balesni, Asa Cooper Stickland, Tomasz Korbak, Owain Evans

BTW, you’ll notice many of the papers I cover here have Owain Evans among the authors.

This is not because I have a bone to pick with him: quite the opposite. I like the guy a bunch, and I think his research is among the least indecent in the whole MIRI-adjacent doomerdom.

Also, he is really curious, and this causes him not to muddy the water like too many of his peers—one big mistake per paper, but ONE: which of course makes his work the ideal victim of the present series.

everyday examples

I am informed that the examples in question are not, in fact, everyday for people with better things to do than memorising geographical trivia. I hope the Australian addition was sufficient to get the point across?
